First lecture in the Distinguished Lecture series at the Institute of Computer Science

On 7 January, we welcomed Prof. Dr. Christian Kästner for the first lecture in the Distinguished Lecture series. Kästner is a professor and head of the Software Engineering PhD programme at the School of Computer Science at Carnegie Mellon University. His research focuses mainly on software analysis and the limits of modularity, especially in the context of highly configurable systems.

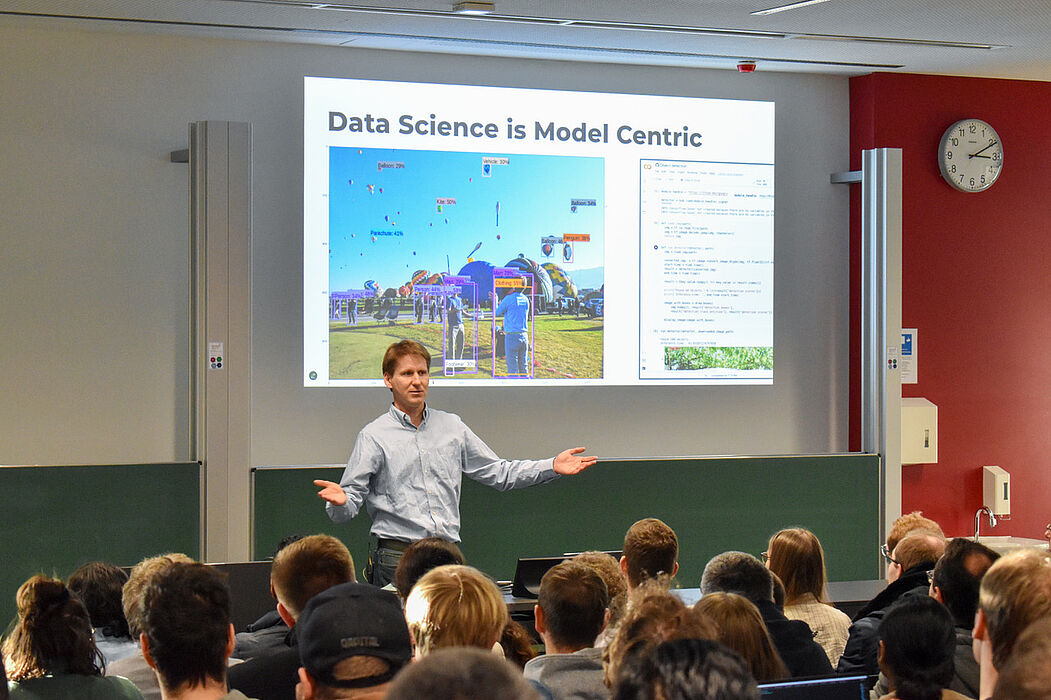

In his lecture at Paderborn University, he argued for more and better education at the intersection of software engineering and machine learning, and for more system-level research into building software systems with machine learning components.

In an interview with us, he answered questions about software engineering, machine learning and their application, as well as about his career:

What do you see as the biggest challenges when it comes to integrating machine learning models into scalable and reliable systems?

Models are unreliable components in software systems. Even a very accurate model will occasionally make mistakes, and this is generally accepted. For example, a model with an accuracy of 99.9% will still be wrong in about 1 in 1,000 cases, and it is not really predictable when that will happen. The key challenge is therefore to build reliable and safe systems from unreliable components.

How do the principles of software engineering differ in the context of traditional applications and machine learning systems?

Many things remain the same, but machine learning brings more complexity, partly because it integrates components that are known to be unreliable. Given this increased complexity, it would be wise to increase the rigour of the methods used to build systems, such as safety engineering and software safety practices. For the most part, we in software engineering have the methods and decades of experience in developing complex, safe and secure systems, but many of these methods are expensive and rarely used outside traditionally safety-critical systems. I think we need more of them. In short, we cannot afford to build software with sloppy methods.

To what extent should the software engineering process be adapted when developing machine learning models that need to continuously learn or adapt to new data?

The main problem is that machine learning is much more like science than engineering. A project is far less predictable than a traditional software project. It is usually unclear whether a model with acceptable accuracy can be created for a specific task at all, and it is difficult to predict when this will be achieved. This leads to many conflicts in software projects where machine learning experts and software engineers work together.

In addition, most machine learning projects do not account for change and continuous updates. This stems from machine learning education, where students work with fixed data sets and are never confronted with evolution or drift. Machine learning projects need to plan for change and incorporate automation, such as automated testing and A/B testing. Much of this is practised under the label MLOps, and there are many success stories, but beginners in particular still struggle with it.

Which tools or methods from software engineering are particularly helpful in ensuring the scalability and maintainability of machine learning systems?

Methods from distributed systems, A/B tests and canary releases (testing in production), safety engineering, and software security methods such as threat modelling.

In your research, how do you deal with the fact that machine learning models are often "black boxes", while software engineering demands transparency and traceability?

Primarily by calibrating expectations. We have to accept that we are dealing with unreliable components that we will not fully understand, but that work on average. This is actually not so different from what we would expect from people performing a task. We need to move away from the unrealistic idea that everything can be specified and proven correct.

What are the most important considerations when testing and validating machine learning systems, especially compared to traditional software testing?

Understanding the system requirements, the model requirements and how they relate to each other. Anticipating errors, assessing their consequences and mitigating model errors with safeguards around the model.
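To make the idea of "safeguards around the model" more concrete, here is a minimal Python sketch. It is an illustration only and not taken from Kästner's work: the model interface (a function returning a predicted value and a confidence score), the safe value range and the confidence threshold are all hypothetical assumptions. The point is that the checks live outside the model and still hold when the model is wrong.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# Hypothetical model interface (an assumption for this sketch):
# takes a feature dict and returns (predicted value, confidence in [0, 1]).
Model = Callable[[dict], Tuple[float, float]]


@dataclass
class Decision:
    value: float
    source: str  # "model", "clamped" or "human_review"


def guarded_predict(model: Model, features: dict, lower: float, upper: float,
                    min_confidence: float = 0.8) -> Decision:
    """Wrap an unreliable model with checks that do not rely on the model being right."""
    value, confidence = model(features)
    if confidence < min_confidence:
        # Safeguard 1: do not act automatically on low-confidence output;
        # hand the case to a person instead.
        return Decision(value, "human_review")
    if not lower <= value <= upper:
        # Safeguard 2: a hard range check derived from the system requirements,
        # independent of the model; out-of-range output is clamped.
        return Decision(min(max(value, lower), upper), "clamped")
    return Decision(value, "model")


if __name__ == "__main__":
    # A stand-in "model" for demonstration only.
    def toy_model(features: dict) -> Tuple[float, float]:
        return features["x"] * 2.0, features["confidence"]

    print(guarded_predict(toy_model, {"x": 10, "confidence": 0.95}, 0.0, 100.0))
    print(guarded_predict(toy_model, {"x": 80, "confidence": 0.95}, 0.0, 100.0))
    print(guarded_predict(toy_model, {"x": 10, "confidence": 0.5}, 0.0, 100.0))
```

Which safeguard is appropriate (clamping, human review, a fallback rule) would depend on the consequences of errors in a given system. Confidence scores can themselves be poorly calibrated, so a range check that is independent of the model is the more robust of the two checks shown here.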

How should developers and engineers deal with the uncertainty and often unpredictable outcomes of machine learning systems?

Calibrate expectations, as mentioned above, and consider carefully where it is responsible to release software with an unreliable component and whether the remaining risk is acceptable.

Professor Kästner, what originally motivated you to become interested in software engineering and machine learning? And how have your research interests developed over the course of your career?

It all came from teaching. Originally, I wanted to convince colleagues to teach this in our Master's programme in Software Engineering, as many of our students go on to develop software products with machine learning components (mobile apps, web applications). This was a clear gap in our curriculum and in teaching at most other places. When no one took this on, a colleague and I decided to do it ourselves.

Through teaching, we learnt so much about the subject that we began to see interesting practical and research problems, and so we started doing research on them.

Were there any professors or mentors who particularly influenced you in your academic career?

I don't usually pursue a long-term agenda, but take up research opportunities as they arise. Many of these projects simply come about through conversations and collaborations. For example, my thinking on this topic was strongly influenced by discussions at a Dagstuhl seminar and by working with my co-lecturer Eunsuk Kang.

What advice would you give to young researchers or students interested in software engineering and machine learning?

a. Talk to practitioners.

b. Read beyond your own field: for example, much of the most interesting literature on software engineering for machine learning is published at conferences on human-computer interaction, machine learning fairness and NLP, not necessarily at traditional software engineering conferences.

What still excites you about your research and teaching, and how do you see your own future in the field?

I see the impact in teaching and how much students appreciate learning about the broader system aspects rather than focusing only on models. As lecturers, we currently have a lot of leverage to shape responsible engineering practices (fairness, safety, security) for the next generation of engineers.

More generally, I enjoy continually learning new things through research.

What experiences or insights from industry have changed your perspective on software engineering and machine learning in academic research?

Almost all discussions with practitioners show that the problems are probably not fundamentally new. Technologies change quickly, but the core problems remain. We have learnt that the core problems are not technological; they are problems of (interdisciplinary) collaboration, communication, requirements and processes. These are all long-standing topics with a large body of knowledge, which in my opinion is even more important now with machine learning.

The "Distinguished Lectures" series of the Institute of Computer Science consists of high-calibre lectures and discussions with national and international personalities, which are intended to stimulate research at our institute and promote the exchange of knowledge between scientists. The event is open to all interested parties and has already got off to a successful start with Professor Kästner.